
Video Links

YouTube link: https://www.youtube.com/watch?v=UkcvMUsCfQE&feature=youtu.be

Vimeo link: https://vimeo.com/260039428

‘24 Hours in Melbourne’

Background

Melbourne is a city of diversity, where food, art and events of every kind are accessible. Yet the name “Melbourne” on its own is fundamentally neutral. It is the people here who bring the city to life, create tremendous possibilities and ultimately set it apart. So, for “24 Hours in Melbourne”, I decided to explore the people who live in Melbourne. I took four photos of people from four different age groups and professions. All of them gave me permission to post their photos online after I promised that the photos would only be used for this assignment.

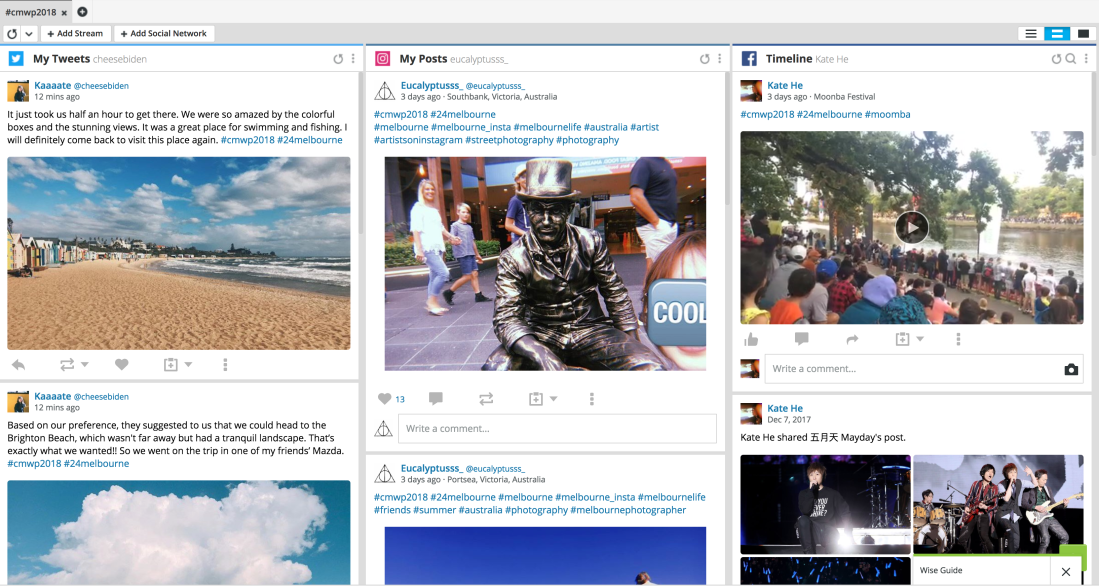

Reflecting on my photo shoots, I noticed an issue. My project is quite similar to Humans of New York (HONY). HONY shows a range of moods on its website: positive, neutral and negative. By contrast, my project seems one-sided. This one-sidedness appears to be common. When I browsed the #cmwp2018 Instagram feed, I found that almost all the content was positive or at least neutral, and none of it expressed negativity. I am also curious about Facebook’s personalization algorithm. I noticed that the order and selection of the content displayed in my feed seemed carefully designed. The claim that Facebook influenced the 2016 U.S. election makes me even more interested in how this mechanism works.

Personalization Algorithms and the Echo Chamber

According to Diakopoulos (2014), personalization algorithms are often called “black boxes”. Although they provide important clues about operational decisions, their inner workings are difficult to penetrate. Each time users log in to Facebook, the algorithm scans and collects everything posted in the past week by their friends and by the Facebook pages they have liked (Oremus 2016). Based on this mechanism, Tufekci (2016), writing in the Huffington Post, criticized the algorithm for suppressing the diversity of the content people see in their feeds. It does this by occasionally hiding posts they may disagree with and letting through posts they are likely to agree with, which leads to an echo chamber. An echo chamber is a situation in which beliefs are amplified or reinforced by communication and repetition inside a closed system, and it may increase political and social polarization and extremism (Barberá et al. 2015). A frequently cited example is the 2016 U.S. election. Many liberals were surprised by the result because the lack of cross-cutting news from the other side in their Facebook feeds had given them a false impression of the likely outcome.

On the other hand, Hosanagar (2016) argued that the primary driver of the digital echo chamber is the actions of users, who feed data to the personalization algorithms. However, he also insisted that internet companies should make more progress on the issue.

In Retrospect

In the digital era, everybody can be both a social media user and a content creator. To avoid getting stuck in an echo chamber, we as users should urge internet companies to improve their algorithms and make them more transparent. We should also listen to voices from the other side instead of simply acquiring information in a closed chamber. As content creators, we need to be aware of how comprehensive our content is by consciously presenting a variety of voices and opinions during production. That is not to say all content must be politically correct, but we need to deliver diversity and objectivity to our audiences.

References

Barberá, P et al. 2015, ‘Tweeting From Left to Right: Is Online Political Communication More Than an Echo Chamber?’, Psychological Science, vol. 26, no. 10, p. 1531.

Diakopoulos, N 2014, Algorithmic Accountability Reporting: On the Investigation of Black Boxes, Columbia University Academic Commons, <https://doi.org/10.7916/D8ZK5TW2>.

Hosanagar, K 2016, ‘Blame the Echo Chamber on Facebook. But Blame Yourself, Too’, Wired, 25 November, viewed 13 March 2018, <https://www.wired.com/2016/11/facebook-echo-chamber/>.

Luckerson, V 2015, ‘Here’s How Facebook’s News Feed Actually Works’, Time, 9 July, viewed 12 March 2018, <http://time.com/collection-post/3950525/facebook-news-feed-algorithm/>.

Oremus, W 2016, ‘Who Controls Your Facebook Feed?’, Slate, 3 January, viewed 12 March 2018, <http://www.slate.com/articles/technology/cover_story/2016/01/how_facebook_s_news_feed_algorithm_works.html>.

Tufekci, Z 2016, ‘Facebook Said Its Algorithms Do Help Form Echo Chambers. And the Tech Press Missed It’, Huffington Post, viewed 13 March 2018, <https://www.huffingtonpost.com/zeynep-tufekci/facebook-algorithm-echo-chambers_b_7259916.html>.